Recently, I’ve been working on a project and spent some hours on exploring the data security feature in Snowflake. I read some articles about Snowflake End to End Encryption and did some investigation around the Client-side Encryption (CSE). Although eventually we didn’t adopt CSE in the project, I feel it’s worthwhile sharing the knowledge with others. Some concepts and the implementation steps are not well explained in the documentation itself, hopefully in this blog I can explain it clearly to anyone who might wish to understand a bit more about the E2EE in Snowflake.

Before we go into details, there’re some key concepts which will help us to get a full picture of Snowflake end to end encryption.

Things you need to know about E2EE

In general, Snowflake provides End to End Encryption which guarantees that only end users can read the data.

- In Snowflake, all data at rest is always encrypted.

- Snowflake runtime components decrypt the data in memory for query processing.

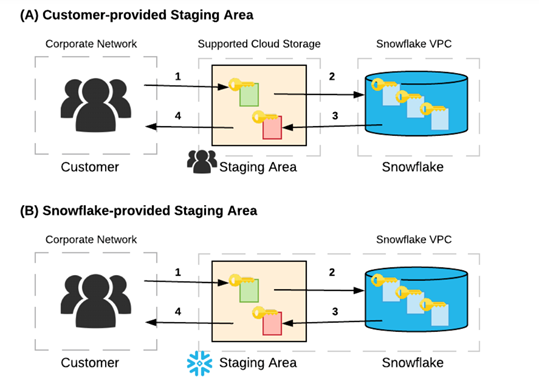

Below diagrams show the work flow of data loading (1,2) and unloading (3,4) process between customer’s on-premise network and Snowflake VPC.

Option(A) customer-provided staging area is referred as external stage in Snowflake. The data files stored in a location outside of Snowflake, it can be Amazon S3 buckets, Google Cloud Storage buckets, or Azure containers.

Option(B) the customer-provided staging area is referred as internal stage in Snowflake. Data files are stored internally within Snowflake.

If you are using Snowflake’s internal stage, then Snowflake automatically encrypt all data files by the client on the client machine prior to being transmitted to the internal stage.

If you are using external stage, the recommendation from Snowflake is to use client-side encryption. Because, Snowflake won’t encrypt the data until it’s landed into Snowflake. That means, customers need to choose the approriate encryption method and take responsibility of data security during the data transmission.

How CSE works

User encrypts data before loading it into Snowflake. The cloud storage service only stores the encrypted version of the data and never includes data in the clear. Client-side encryption follows a specific protocol defined by the cloud storage service. The service SDK and third-party tools implement this protocol.

The client-side encryption protocol works as follows:

- User creates a secret master key using OpenSSL or other tools. The master key must be a 128 bit or 256 bit key in Base64-encoded form.

- The client (SDK provided by the cloud storage service) generates a random encryption key and encrypts the file before uploading it into cloud storage. The random encryption key is encrypted with the master key generated in step 1.

- Both the encrypted file and the encrypted random key are uploaded to the cloud storage service. The encrypted random key is stored with the file’s metadata.

How does encryption works on AWS S3 bucket?

Snowflake supports both client-side Encryption and server-side Encryption. Check out the details here : AWS Data File Encryption — Snowflake Documentation

1. Client-side encryption (AWS_CSE)

AWS provides two options to enable client-side encryption, but only the second option is supported by Snowflake.

- use a customer master key (CMK) stored in AWS KMS (not supported by Snowflake)

- use a master key store within application

To help understanding the client side encryption protocol, I’ve drawn a Snowflake client-side encryption/decryption diagram which fleshes out the 3 steps of the client-side encryption protocol listed above.

2. Server-side encryption (AWS_SSE_S3 and AWS_SSE_KMS)

AWS_SSE_S3 (no additional encryption settings in Snowflake)

AWS_SSE_KMS (Optional KMS_KEY_ID value in Snowflake)

How to implement Client-side encryption in AWS?

With all the knowledge about client-side encryption and server-side encryption, I’m sure you’d like to ask how do we implement it and give it a go? Well, I’ve got all the source code which can help you do the

First, let’s see the what language does AWS SDKs for client-side encryption support:

.NET , Go, Java, PHP, Ruby, C++

Yes, NO PYTHON!!! I use this Code Example as a reference and wrote my own ruby script which will generate the master key and upload the file to S3 using client side encryption. My code in Github can be found here. Check out the readme.md file if you’ d like to have a try:

-- environment setup

1. install ruby2. install bundler: `gem install bundler`3. run `bundle install`-- generate master keybundle exec ruby generate_master_key.rb-- encrypt and upload filebundle exec ruby s3_file_upload.rb

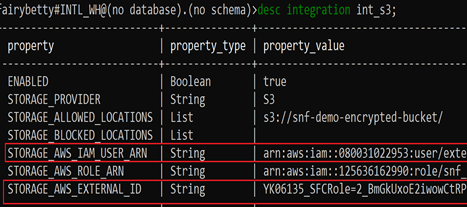

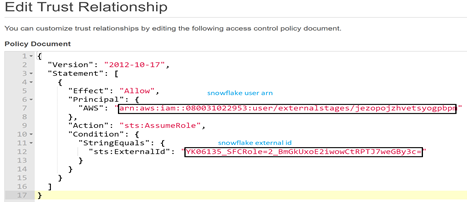

Now, to be able to read file from S3, the first thing need to do is to create a storage integration in Snowflake. Along with that, you also need to setup an IAM role and IAM policy to allow read write access from your Snowflake AWS account to your storage AWS account.

Step 1. Configuring a Snowflake Storage Integration

- Create IAM policy to access the S3 bucket

- Create the IAM role and attach the previous IAM policy. Make sure that you select “another AWS account” as the trusted entity type and record the role ARN

- Create Cloud Storage Integration in Snowflake

use role accountadmin;

CREATE OR REPLACE STORAGE INTEGRATION int_s3

TYPE = EXTERNAL_STAGE

STORAGE_PROVIDER = S3

ENABLED = TRUE

STORAGE_AWS_ROLE_ARN = 'arn:aws:iam::125636162990:role/snf_access_iam_role'

STORAGE_ALLOWED_LOCATIONS = ('s3://snf-demo-bucket/','s3://snf-demo-bucket-sse-s3','s3://snf-demo-bucket-sse-kms-own-key','s3://snf-demo-bucket-sse-kms-aws-managed');desc integration int_s3;grant usage on integration int_s3 to role r_dev;

4. Retrieve the AWS IAM user for the Snowflake account

5. Grant the IAM user permissions to access s3 bucket.

The Second thing to do is to create an external stage which you will specify the encryption type as AWS_CSE and the master key.

Step 2. Configuring a Snowflake External Stage

- Create external Stage

use role r_dev;create or replace stage stg_s3_cse url='s3://snf-demo-bucket'

storage_integration=int_s3

encryption=(TYPE='AWS_CSE' MASTER_KEY='cD5dmWZ6N5xj9hOMdG4q/X3jH8c/hS0jkPYKowD3VBw=');

2. Create external table and run the query!

CREATE OR REPLACE EXTERNAL TABLE ext_tbl_test_cse

LOCATION = @stg_s3_cse

FILE_FORMAT = (FORMAT_NAME = 'ff_test');select * from ext_tbl_test_cse;

Implement Client-side encryption in Azure

First, let’s see the what language does Azure SDKs for client-side encryption support:

- Java

- .NET

- Python

Check out this Code Example if you need to use Azure containers.

Enable both CSE and SSE

Actually, you can enable both client-side encryption and server-side encryption at the same time. Yes! you can enable both of them together!

Below code output shows the header of a Client-side encrypted file in two S3 buickets: the first one (S3 bucket snf-demo-bucket)is CSE only — which means only the file is encrypted at client side. The second one (snf-demo-bucket-sse-kms-own-key) is CSE plus SSE KMS which means the file is client-side encrypted plus the S3 bucket is SSE enabled.

SH@PC4006:~/tmp$ aws s3api head-object --bucket snf-demo-bucket --key my_data.csv

{

"AcceptRanges": "bytes",

"LastModified": "2020-12-10T12:29:42+00:00",

"ContentLength": 64,

"ETag": "\"35a656bbaaf625b69ed7456ec816eb92\"",

"ContentType": "",

"Metadata": {

"x-amz-key": "jhXdJgKAZOtFsFCd89C9NpZJifOSgjB0DO+dFxOAZdrCxKNMjX2J78mp1g8R9Bs8",

"x-amz-unencrypted-content-length": "56",

"x-amz-matdesc": "{}",

"x-amz-iv": "xzU4D/gtDV8vI8hwaa3eEg=="

}

}

SH@PC4006:~/tmp$ aws s3api head-object --bucket snf-demo-bucket-sse-kms-own-key --key my_data.csv

{

"AcceptRanges": "bytes",

"LastModified": "2020-12-10T12:39:08+00:00",

"ContentLength": 64,

"ETag": "\"5fb541ab44c1ba7ef78bb04a3ef8baf6\"",

"ContentType": "",

"ServerSideEncryption": "aws:kms",

"Metadata": {

"x-amz-key": "N7kdXRuGRwmdCsq7l8pEBuXtxd8j+egkmLNgAk24MwTCxKNMjX2J78mp1g8R9Bs8",

"x-amz-unencrypted-content-length": "56",

"x-amz-matdesc": "{}",

"x-amz-iv": "V+16SisrhH4N0AOkwYFeFA=="

},

"SSEKMSKeyId": "arn:aws:kms:ap-southeast-2:125636162990:key/2b9782a8-44ff-4438-84e4-71a7fa61bf9d"

}

I’ve done all the tests with the combination as shown below and they all worked!

In short, when creating the stage, you specify the encryption type as AWS_CSE no mater you have server-side encryption enabled or not. All configuration remain the same. The only exception is that if you use server-side encryption with your own KMS master key, you need to modify the IAM role to allow kms:decrypt.